Stevens Prof Uses Big Data To Transcribe Ancient History

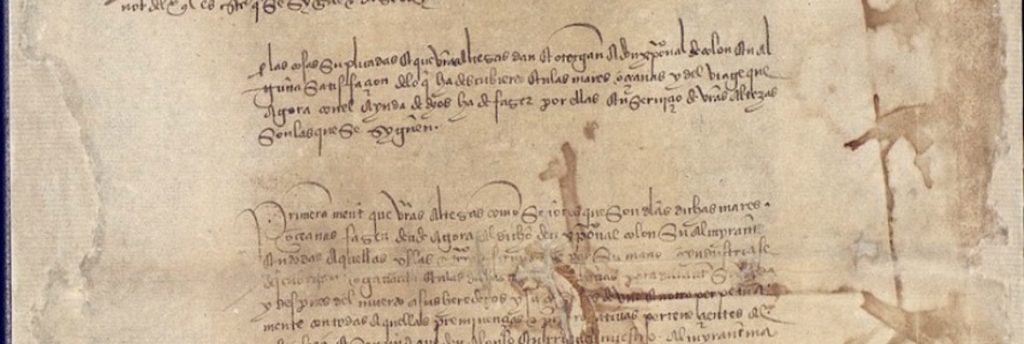

Stevens Institute of Technology recently revealed details about new research by computer science professor and machine learning expert Fernando Perez-Cruz, who aims to digitize and analyze 88 million pages of ancient handwritten documents. The archive might provide insights into questions about “European history, the Conquistadors, New World contact and colonialism, law, politics, social mores, economics and ancestry.”

Perez-Cruz asks, “What if a machine, a software, could transcribe them all? And what if we could teach another machine to group those 88 million pages of searchable text into several hundred topics? Then we begin to understand themes in the material.”

Perez-Cruz hopes to build an “increasingly accurate recognition engine over time” by “teaching software to recognize both the shapes of characters and frequently-correlated relationships of letters and words to each other.” He believes this approach, dubbed ‘interpretable machine learning,’ could be applied to “numerous other next-generation data analysis questions such as autonomous transport and medical data analysis.”
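To make the idea of combining character shapes with letter correlations concrete, here is a minimal sketch. It is not Perez-Cruz's engine: it assumes a hypothetical shape recognizer has already produced a probability for each candidate letter at each position, and it uses standard Viterbi decoding with a made-up table of letter-pair probabilities to pick the most plausible word. The function name `decode_word`, the scores, and the pair probabilities are all illustrative assumptions.

```python
import math

def decode_word(shape_scores, bigram, floor=1e-6):
    """Viterbi decoding: find the letter sequence that maximizes the product
    of per-position shape probabilities and letter-pair probabilities."""
    alphabet = sorted({c for scores in shape_scores for c in scores})
    # best[c] = (log-probability of the best path ending in c, that path)
    best = {c: (math.log(shape_scores[0].get(c, floor)), c) for c in alphabet}
    for scores in shape_scores[1:]:
        nxt = {}
        for c in alphabet:
            emit = math.log(scores.get(c, floor))
            # Pick the previous letter that gives the best path into c.
            prev = max(
                alphabet,
                key=lambda p: best[p][0] + math.log(bigram.get((p, c), floor)),
            )
            lp = best[prev][0] + math.log(bigram.get((prev, c), floor)) + emit
            nxt[c] = (lp, best[prev][1] + c)
        best = nxt
    return max(best.values())[1]

# Hypothetical recognizer output for a three-letter word: the middle
# letter looks slightly more like "n" than "u" in this made-up example.
shape_scores = [
    {"q": 0.9, "g": 0.1},
    {"u": 0.45, "n": 0.55},
    {"e": 0.8, "c": 0.2},
]

# Made-up letter-pair probabilities; "q" is almost always followed by "u".
bigram = {
    ("q", "u"): 0.9, ("q", "n"): 0.001,
    ("g", "u"): 0.1, ("g", "n"): 0.05,
    ("u", "e"): 0.3, ("n", "e"): 0.3,
}

shape_only = "".join(max(s, key=s.get) for s in shape_scores)
decoded = decode_word(shape_scores, bigram)
print(shape_only, decoded)
```

On this toy input, the shape scores alone read the word as "qne", while the decoder that also weighs letter pairs returns "que". A real system would learn both sets of probabilities from data rather than hard-coding them.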

The next step in the process is even more interesting to Perez-Cruz: “the organization of massive quantities of known transcribed data into usable topics at a glance.” He elaborates on how his analysis techniques might interpret three-and-a-half centuries of unstructured data:

“In the end, we might find that there are for example a few hundred subjects or narratives that run through this entire archive, and then I suddenly have an 88-million-document problem that I have summarized in 200 or 300 ideas.”
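The article does not say which topic-modeling method the project uses. As one illustration of how transcribed pages might be grouped into topics, the sketch below runs a small collapsed Gibbs sampler for latent Dirichlet allocation (a common topic model, swapped in here as an assumption) on a handful of invented toy documents. The function name `fit_topics`, the hyperparameters, and the sample words are hypothetical.

```python
import random
from collections import Counter

def fit_topics(docs, num_topics, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Collapsed Gibbs sampling for LDA on tokenized documents.
    Returns the top words per topic and each document's topic mixture."""
    rng = random.Random(seed)
    vocab_size = len({w for d in docs for w in d})
    # Start with a random topic for every word occurrence.
    assign = [[rng.randrange(num_topics) for _ in d] for d in docs]
    doc_topic = [Counter(a) for a in assign]
    topic_word = [Counter() for _ in range(num_topics)]
    topic_total = [0] * num_topics
    for d, a in zip(docs, assign):
        for w, k in zip(d, a):
            topic_word[k][w] += 1
            topic_total[k] += 1

    for _ in range(iterations):
        for i, d in enumerate(docs):
            for j, w in enumerate(d):
                # Remove the current assignment, then resample it.
                k = assign[i][j]
                doc_topic[i][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                weights = [
                    (doc_topic[i][t] + alpha)
                    * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assign[i][j] = k
                doc_topic[i][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    top_words = [
        [w for w, n in topic_word[t].most_common(3) if n > 0]
        for t in range(num_topics)
    ]
    doc_mix = [
        [(doc_topic[i][t] + alpha) / (len(d) + num_topics * alpha)
         for t in range(num_topics)]
        for i, d in enumerate(docs)
    ]
    return top_words, doc_mix

# Invented toy "pages", already transcribed and split into words.
docs = [
    "ship cargo port gold ship".split(),
    "court law judge law decree".split(),
    "port ship gold cargo".split(),
    "judge court decree law".split(),
]
top_words, doc_mix = fit_topics(docs, num_topics=2)
for t, words in enumerate(top_words):
    print(f"topic {t}: {words}")
```

Each document ends up with a mixture over topics that sums to one, and each topic is summarized by its most frequent words. Scaling the same idea from four toy pages to 88 million would require a distributed or approximate inference method, which this sketch does not attempt.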

He concludes, “Once you understand the data, you can begin to read it in a specific manner, understand more clearly what questions to ask of it, and make better decisions.”